Agent007 The A* Scholar

Agent007: The A* Scholar Status Report

Status Report Video

Project Summary:

Environment:

A large flat world map with many random spawned items. 007 has a certain amount of time to grab as many high value items as possible. We are still debating on including mobs or pitfall spots or “point suck” holes on the map.

Current Status:

The current state utilizes the A* star algorithm and applies hueristics of shortest path from agent to items and hueristic of item value to grab close high value items for optimal solution. In further updates, we will employ a learned hueristic to look out for dangers on the map and look for potential path strategies that will lead to better outputs (removing the bias our paths take depending on agent spawn). There is a 10% chance a random move will be made. This is not optimal now since there is no learned search hueristic implemented yet, but this will be good in the future when our hueristic will learn from past runs (one static run won’t teach much).

Current Preformance:

Learning Hueristic:

Learning from experience can significantly improve the performance of search based agents; effectively we would encode experiences from past runs to guide search.

Clear advantage of employing learned hueristic on current problem:

Our current approach is using the static hueristics to find a suboptimal solution. There is a heavy bias on agent spawn and close local high value items. We wish to implement a learned hueristic process like bootstrapping or imitation or an experience graphto remove bias. Bootstrapping is an iterative procedure that uses learning to create a series of heuristic functions. Initially, this procedure requires a heuristic function h0 and a set of states we call the bootstrap instances. There are no solutions given for any instances, and h0 is not assumed to be strong enough to solve any of the given instances. Imitation is an efficient algorithm that trains heuristic policies by imitating clairvoyant oracles - oracles that have full information about the world and demonstrate decisions that minimize search effort. Experience graphs also work well for our problem, assisting search with experience from previous runs.

Potential Challenges We Can Add

- crafting

- “point drain spots”

- enemy MOBs

What can the AI algorithm do?

- crafting: dont know value of crafted item till after: Learn to go down some high craft item paths.

- “point drain spots”: Don’t know these spots before reaching them. Learn to avoid them.

- enemy MOBs: Paths that avoid mobs upon contact could do better.

Problems with the learning hueristic?

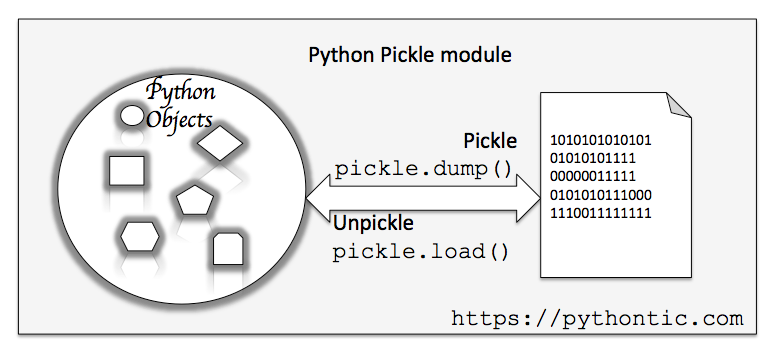

The learning heuristic is time consuming as it requires many saved trials. We will also need to optimize relationship between random moves, static hueristic, and between how many steps will the learned hueristic guide player movement. The way we plan to solve the long train time issue is serializing the dictionary of pastruns with a library like Pickle and loading to in for future runs. We will attempt many different combinations of random move percent/number of moves before learning huertic guides path to find optimal solution.

Implementation Strategy

Previous high scoring paths will be saved in a database, a similarity check between current path and prev paths will be implemented every x steps, highest close scoring best path will be chosen, repeat till end of timer. If current path good, add to DB.

Pseudocode:

1: procedure pastProcessingRuns:

2: PLDB <- buildDatabase(S,k) S is set of solved instances, k is samples per plan

3: PARTITIONDATABASE(PLDB,n) <- n is number of additional hueristics

4: procedure inGameProcessing:

5: input: Problem isntance P(i) consistent hueristic h0 (distance + reward)

6: FINDHUERISTICCANDIDATES(P(i), n)

7: PLANWITHMHA*

8: IF PLAN BEATS SCORE CRITERIA:

9: UPDATEDB

Sources:

- Learning Heuristic Functions For Large State Spaces

- Learning Heuristic Search via Imitation

- Learning to Search More Efficiently from Experience: A Multi-Heuristic Approach

Reports: